Modern Systems Fail at the Gaps, Not the Components

Modern engineering teams rarely struggle because a tool is missing.

They struggle because tools don’t work together as a system.

CI/CD pipelines may be green, Kubernetes clusters may be healthy, and models may show strong offline metrics — yet production incidents still occur. In 2026, this gap is no longer accidental. It is structural.

As software systems increasingly embed machine learning, the boundaries between CI/CD, Kubernetes orchestration, and MLOps workflows are collapsing. Teams that continue to treat them as separate disciplines face slower releases, fragile deployments, and failures that are hard to diagnose. Teams that integrate them gain speed without sacrificing reliability.

Why Traditional CI/CD Assumptions Break in AI-Driven Systems

Classic CI/CD pipelines were built around deterministic software. A build either passes or fails. Tests either succeed or break. Once deployed, behavior is largely predictable.

Machine learning systems violate these assumptions at every stage.

A model can pass all automated checks and still perform poorly once exposed to real-world data. Data distributions shift silently. Feedback loops amplify small errors. Rollbacks are not always straightforward, because reverting a model does not revert the data it has already influenced.
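
One way to make silent distribution shift visible is to compare a feature's training-time sample against a recent production sample. The sketch below uses the Population Stability Index (PSI), a technique chosen here for illustration and not named in this article. The function name, the bin count, and the 0.25 alert threshold mentioned afterwards are assumptions; the threshold is a common rule of thumb, not a standard.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of one numeric feature; 0.0 means identical histograms."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("reference sample has no spread")
    width = (hi - lo) / bins

    def fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the reference range are clamped into the edge bins.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A pipeline stage could compute this per feature on a schedule and raise an alert when the score exceeds a chosen threshold such as 0.25. The right threshold depends on the feature and the cost of a false alarm.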

This forces a fundamental shift in how CI/CD pipelines are designed. Pipelines can no longer validate only code correctness; they must also validate behavioral safety, data quality, and risk exposure.
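
As a minimal sketch of what such a widened gate might look like, the example below combines a code check with data-quality, behavioral, and drift checks and returns the reasons for any refusal. All names (`GateInput`, `evaluate_gate`) and every threshold are hypothetical and would need tuning per system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GateInput:
    tests_passed: bool         # classic CI signal: unit and integration tests
    null_fraction: float       # share of missing values in the validation data
    candidate_accuracy: float  # offline metric for the new model
    baseline_accuracy: float   # same metric for the model currently serving
    drift_score: float         # any drift measure, for example a PSI value


@dataclass
class GateResult:
    allowed: bool
    reasons: List[str]


def evaluate_gate(
    x: GateInput,
    max_null_fraction: float = 0.01,
    max_accuracy_drop: float = 0.02,
    max_drift: float = 0.25,
) -> GateResult:
    """Collect every failed check so the pipeline log explains the decision."""
    reasons: List[str] = []
    if not x.tests_passed:
        reasons.append("code tests failed")
    if x.null_fraction > max_null_fraction:
        reasons.append("too many missing values in validation data")
    if x.baseline_accuracy - x.candidate_accuracy > max_accuracy_drop:
        reasons.append("candidate underperforms baseline")
    if x.drift_score > max_drift:
        reasons.append("input drift above threshold")
    return GateResult(allowed=not reasons, reasons=reasons)
```

Returning all reasons instead of stopping at the first failure is a deliberate choice here: it makes a blocked promotion easier to diagnose from a single pipeline run.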

Kubernetes as the Shared Control Plane for CI/CD and MLOps

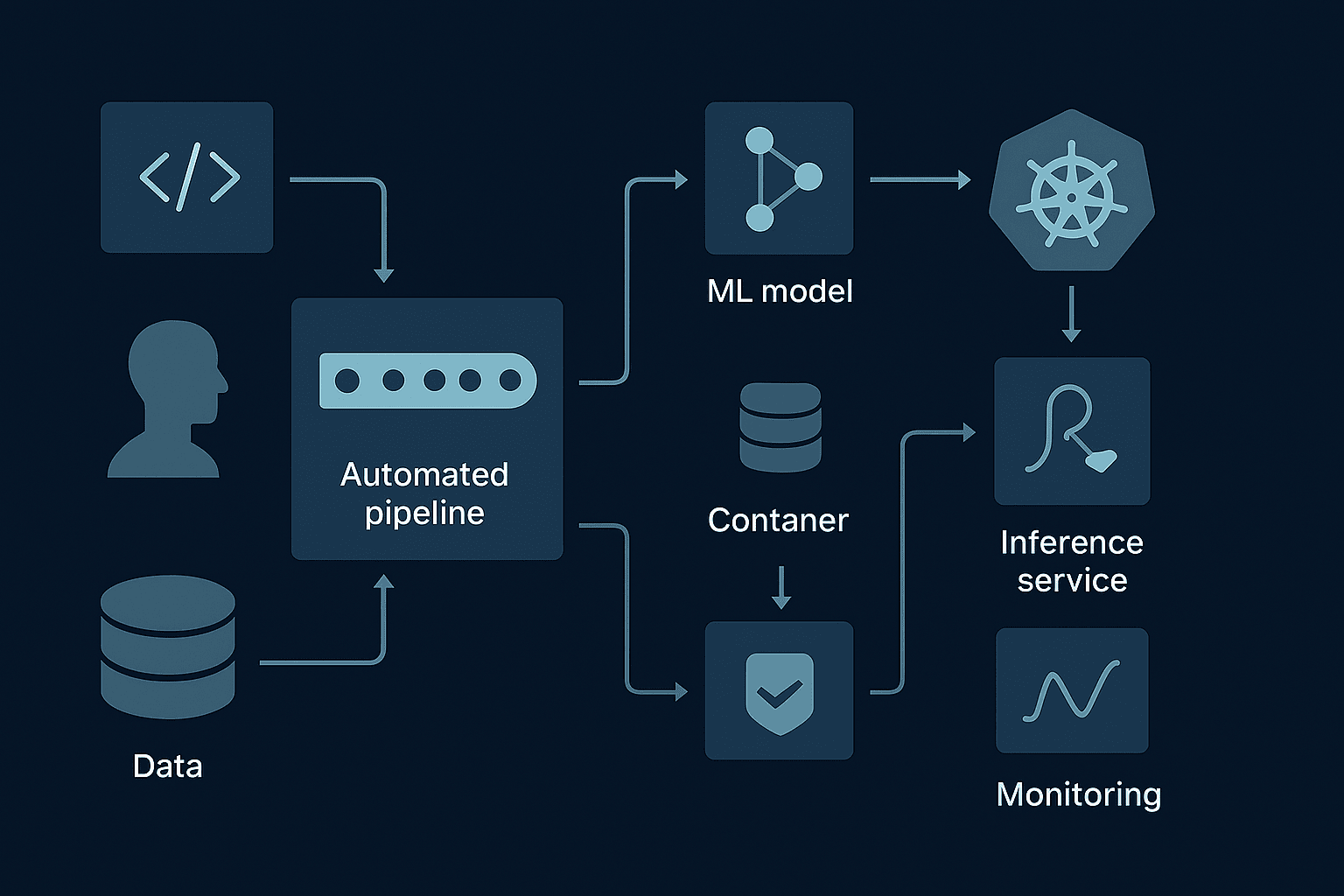

Kubernetes has evolved far beyond container scheduling. In many organizations, it has become the operational backbone that connects CI/CD execution and MLOps workflows.

Training jobs, batch evaluations, feature generation, and inference services all run within the same orchestration environment. This creates a consistent execution model across experimentation and production, reducing the friction that historically existed between ML teams and platform engineers.

More importantly, Kubernetes provides isolation boundaries that are essential for safe experimentation. Different model versions can run side by side. Canary deployments become practical. Resource limits prevent runaway training jobs from destabilizing shared infrastructure.
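
To illustrate the resource-limit point, the sketch below builds a Kubernetes `batch/v1` Job manifest as a plain Python dictionary and renders it as JSON, which `kubectl apply -f` accepts alongside YAML. The job name, image, label, and default limits are placeholders invented for this example, and the helper functions are hypothetical, not part of any Kubernetes client library.

```python
import json
from typing import Any, Dict


def training_job_manifest(
    name: str,
    image: str,
    cpu_limit: str = "4",
    memory_limit: str = "8Gi",
    deadline_seconds: int = 3600,
) -> Dict[str, Any]:
    """Build a Job manifest with explicit CPU, memory, and runtime caps."""
    resources = {"cpu": cpu_limit, "memory": memory_limit}
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name, "labels": {"workload": "training"}},
        "spec": {
            "backoffLimit": 0,  # do not retry a failed training run
            "activeDeadlineSeconds": deadline_seconds,  # cap total runtime
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": "trainer",
                            "image": image,
                            # Requests equal limits, so the scheduler reserves
                            # exactly what the container is allowed to use.
                            "resources": {
                                "requests": dict(resources),
                                "limits": dict(resources),
                            },
                        }
                    ],
                }
            },
        },
    }


def render(manifest: Dict[str, Any]) -> str:
    """Serialize the manifest as JSON."""
    return json.dumps(manifest, indent=2)
```

Calling `render(training_job_manifest("train-ranker", "registry.example.com/trainer:1.0"))` yields a document that can be committed to a repository or piped to `kubectl apply -f -`. A job that exceeds its memory limit is terminated instead of starving its neighbors.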

In effect, Kubernetes becomes the place where code, models, and infrastructure converge.

The unpredictability of model behavior in production is not a flaw of machine learning itself; it reflects how generative and data-driven systems differ from traditional software in the way they learn and adapt.

The Evolution of CI/CD Pipelines: From Code Delivery to System Readiness

In 2026, mature CI/CD pipelines look very different from their predecessors.

A single pipeline may now orchestrate:

- Application code builds

- Infrastructure changes

- Feature store updates

- Model version promotions

- Inference configuration rollouts

Rather than ending with deployment, pipelines increasingly include post-deployment validation stages. Models are evaluated against live traffic in shadow mode. Predictions are compared against baselines. Automated policies decide whether traffic can be increased or must be rolled back.
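
The shadow-comparison and traffic-policy steps described above can be sketched as follows. This is an illustrative simplification: the traffic steps, both thresholds, and all function names are assumptions, and a real system would compare far richer signals than label disagreement.

```python
from enum import Enum
from typing import Sequence


class Decision(Enum):
    PROMOTE = "promote"
    HOLD = "hold"
    ROLLBACK = "rollback"


# Hypothetical rollout ladder, in percent of live traffic.
TRAFFIC_STEPS = (0, 1, 5, 25, 50, 100)


def disagreement_rate(baseline: Sequence[int], shadow: Sequence[int]) -> float:
    """Share of requests where the shadow model disagrees with the baseline."""
    if not baseline or len(baseline) != len(shadow):
        raise ValueError("prediction lists must be non-empty and equal in length")
    return sum(b != s for b, s in zip(baseline, shadow)) / len(baseline)


def decide(
    disagreement: float,
    error_rate: float,
    max_disagreement: float = 0.05,
    max_error_rate: float = 0.01,
) -> Decision:
    """Serving errors force a rollback; large disagreement pauses the rollout."""
    if error_rate > max_error_rate:
        return Decision.ROLLBACK
    if disagreement > max_disagreement:
        return Decision.HOLD
    return Decision.PROMOTE


def next_traffic_percent(current: int, decision: Decision) -> int:
    """Move one step up the ladder, stay put, or drop to zero."""
    if decision is Decision.ROLLBACK:
        return 0
    if decision is Decision.HOLD:
        return current
    for step in TRAFFIC_STEPS:
        if step > current:
            return step
    return 100
```

Keeping the decision separate from the traffic change means the policy can be reviewed and tested on its own, while the rollout mechanism only has to apply a percentage.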

This shift reflects a deeper realization: deployment is no longer the end of risk; it is the beginning of observation.

MLOps Is Becoming an Extension of Platform Engineering

Early MLOps initiatives often lived in isolation, owned by small specialist teams. That model no longer scales.

As machine learning becomes embedded in core business workflows, its operational concerns overlap heavily with platform engineering. Model failures trigger the same on-call rotations as service outages. Latency spikes matter as much as accuracy drops. Security, compliance, and auditability apply equally to models and microservices.

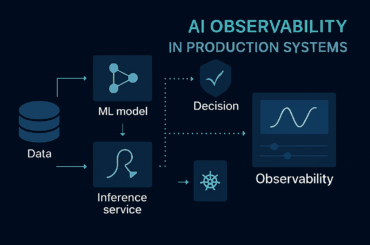

In response, organizations are aligning MLOps tooling with existing DevOps and platform practices. Training pipelines trigger CI workflows. Model registries integrate with deployment systems. Observability spans infrastructure metrics and prediction quality in a single view.

This convergence reduces handoffs and clarifies ownership — two of the biggest sources of production risk.

GitOps as the Unifying Pattern

One of the most important trends shaping this convergence is the rise of GitOps as a unifying operational model.

By treating Git as the source of truth, teams gain:

- Clear audit trails

- Reproducible environments

- Safer rollbacks

- Enforced review processes

In modern setups, Git repositories often reference not only Kubernetes manifests but also model versions, feature flags, and access policies. Changes to AI behavior become explicit, reviewable, and reversible.
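
At its core, a GitOps controller compares the state declared in Git with the state observed in the cluster and acts on the difference. The sketch below shows only that comparison, using flat dictionaries. The keys (`model_version`, `canary_percent`) and the `diff_state` helper are hypothetical; real controllers work on full Kubernetes objects.

```python
from typing import Any, Dict, List, Tuple

# (key, live value, desired value)
Change = Tuple[str, Any, Any]


def diff_state(desired: Dict[str, Any], live: Dict[str, Any]) -> List[Change]:
    """List every key whose live value differs from what Git declares.

    A key missing on one side is reported with None for that side.
    """
    changes: List[Change] = []
    for key in sorted(set(desired) | set(live)):
        want, have = desired.get(key), live.get(key)
        if want != have:
            changes.append((key, have, want))
    return changes
```

With `desired = {"model_version": "v13", "canary_percent": 5}` read from the repository and a cluster still serving `"v12"`, the function reports a single pending change to `model_version`. Reverting the commit flips the desired value back, which is what makes a rollback an ordinary, reviewable Git operation.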

This matters because AI systems fail quietly. GitOps introduces intentional friction — slowing down dangerous changes while still enabling rapid iteration.

As more organizations move machine learning models into live environments, the operational challenges no longer stop at experimentation. Production AI systems now influence user experiences, business decisions, and automated workflows at scale.

Real Production Scenarios Where Convergence Matters

In continuous model delivery systems, new data can trigger retraining automatically. The resulting model is evaluated, deployed to a shadow environment, and gradually exposed to users — all through a coordinated CI/CD and Kubernetes workflow. Failures are detected early, before they become incidents.

In high-risk domains, canary deployments and real-time monitoring help catch bias, drift, or unacceptable error rates while a model update is still exposed to only a small share of traffic. Kubernetes handles isolation and scaling, while CI/CD governs promotion logic.

In large-scale inference platforms, pipelines manage version consistency while Kubernetes absorbs traffic spikes. MLOps tooling monitors output quality, ensuring performance degrades gracefully instead of catastrophically.

These are not experimental patterns — they are becoming standard practice.

Security and Governance Are No Longer Optional Add-Ons

As pipelines gain more power, the risks grow with them.

Modern systems increasingly enforce identity-aware CI/CD pipelines, signed artifacts, and policy-driven Kubernetes deployments. Sensitive changes require human approval, and every promotion is traceable.
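
A promotion check in this spirit might look like the sketch below. It is deliberately simpler than real artifact signing: it only verifies that a model file matches a SHA-256 digest pinned at review time, and it requires a named approver for sensitive changes. Verifying a cryptographic signature would need a dedicated signing tool and is not shown. The function names and the `sha256:` prefix convention are assumptions.

```python
import hashlib
import hmac
from typing import Optional


def sha256_digest(payload: bytes) -> str:
    """Content digest of an artifact, prefixed with the algorithm name."""
    return "sha256:" + hashlib.sha256(payload).hexdigest()


def may_promote(
    payload: bytes,
    pinned_digest: str,
    sensitive: bool,
    approved_by: Optional[str] = None,
) -> bool:
    """Allow promotion only for the reviewed bytes, with approval if needed."""
    # compare_digest performs a constant-time comparison of the two strings.
    if not hmac.compare_digest(sha256_digest(payload), pinned_digest):
        return False
    if sensitive and not approved_by:
        return False
    return True
```

Pinning the digest in the same repository change that requests the promotion ties the approval to exact bytes, so an artifact swapped after review fails the check.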

This is particularly important as AI systems gain autonomy. When models trigger downstream actions, governance must be built into the delivery process, not bolted on afterward.

What This Means for Engineering Teams in 2026

For developers, this convergence means understanding more than application code. Awareness of data flows and model behavior becomes part of responsible engineering.

For platform teams, it means designing abstractions that simplify workflows without hiding risk. Kubernetes platforms must support experimentation and safety simultaneously.

For ML engineers, it means treating production readiness as a first-class concern. Accuracy alone is no longer sufficient; reliability and observability matter just as much.

Final Thoughts: Systems Thinking Wins

The most successful teams in 2026 are not the ones with the best individual tools. They are the ones that design for the system as a whole.

CI/CD, Kubernetes, and MLOps are no longer parallel tracks. They form a continuous loop where building, deploying, observing, and learning happen together.

Teams that embrace this reality move faster with fewer surprises.

Teams that do not embrace it spend their time debugging the gaps between systems.

FAQs

Traditional CI/CD pipelines validate code correctness but not model behavior. Machine learning systems can pass tests and still fail in production due to data drift, bias, or unpredictable real-world inputs, requiring additional validation stages beyond standard builds.

Kubernetes provides a consistent execution environment for training jobs, batch processing, and inference services. It enables model version isolation, scalable deployments, canary releases, and resource control, making it well-suited for production MLOps workflows.

GitOps is an operational model where Git repositories act as the source of truth for deployments. For AI systems, GitOps improves auditability, enables safe rollbacks, and ensures that model versions, configurations, and infrastructure changes are reviewable and traceable.

MLOps does not stand apart from DevOps; it extends DevOps principles to machine learning systems. As AI becomes part of core applications, MLOps increasingly integrates with CI/CD and platform engineering rather than operating as a separate discipline.