Why AI Systems Break in Places No One Looks

When an AI model fails in production, the first instinct is to blame the model.

Maybe it needs retraining.

Maybe the architecture is wrong.

Maybe the algorithm isn’t advanced enough.

But in many production environments, the problem starts much earlier, before the model ever sees data. It starts with features.

Features are the signals that models learn from, derived from raw data: user behavior, transaction history, device metadata, time-based patterns, contextual attributes. They are the inputs that shape every prediction. And yet, in many organizations, features are treated as temporary artifacts rather than long-term infrastructure.

This is why feature stores have quietly become one of the most important components of production AI systems in 2026. Not because they are trendy — but because they solve problems teams keep rediscovering the hard way.

What a Feature Store Really Is

At a practical level, a feature store is a central system for defining, storing, and serving machine learning features consistently across training and production.

But that definition alone undersells its importance.

A feature store is not just a database. It is a contract between data engineering, machine learning, and production systems. It ensures that when a model is trained on a feature, the same logic computes that feature when the model runs in real time.

Without this contract, teams unknowingly create two different realities:

- One during training

- Another during inference

And models fail precisely in the gap between the two.

The Silent Killer: Training–Serving Skew

One of the most common production issues in machine learning is training–serving skew.

This happens when:

- Features are calculated one way during training

- And slightly differently during inference

The difference might be small — a rounding change, a missing filter, a delayed data source — but the impact can be massive.

In early-stage systems, this goes unnoticed. As traffic grows and models influence real decisions, the skew turns into:

- Accuracy decay

- Unexplainable behavior

- Loss of trust in AI outputs

Feature stores exist largely to remove this class of problems by having training and inference compute each feature from one shared definition.
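
To make the skew concrete, here is a minimal Python sketch. The `Transaction` type, the function names, and the sample data are all hypothetical and exist only for illustration. It shows a batch pipeline that filters out refunds, a hand-rewritten serving path that forgot the filter, and a single shared definition that both sides could import instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    amount: float
    refunded: bool


def avg_spend_training(txns: list[Transaction]) -> float:
    """Batch pipeline version: excludes refunded transactions."""
    kept = [t.amount for t in txns if not t.refunded]
    return sum(kept) / len(kept) if kept else 0.0


def avg_spend_serving(txns: list[Transaction]) -> float:
    """Hand-rewritten serving version: the refund filter was forgotten."""
    amounts = [t.amount for t in txns]
    return sum(amounts) / len(amounts) if amounts else 0.0


def avg_spend(txns: list[Transaction]) -> float:
    """Single shared definition that both training and serving would import."""
    kept = [t.amount for t in txns if not t.refunded]
    return sum(kept) / len(kept) if kept else 0.0


history = [
    Transaction(amount=100.0, refunded=False),
    Transaction(amount=50.0, refunded=False),
    Transaction(amount=300.0, refunded=True),
]

skew = avg_spend_serving(history) - avg_spend_training(history)
print(f"training sees {avg_spend_training(history):.2f}")  # 75.00
print(f"serving sees  {avg_spend_serving(history):.2f}")   # 150.00
print(f"skew          {skew:.2f}")                         # 75.00
```

Nothing crashes here, which is the point: the model simply receives a value at inference time that is twice what it saw for the same user during training.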

Why Feature Stores Matter More in 2026 Than Before

AI systems today are very different from those of just a few years ago.

Models are:

- Larger and more expensive to run

- Integrated into user-facing workflows

- Deployed continuously via CI/CD pipelines

- Subject to regulatory and compliance scrutiny

At the same time, organizations are running multiple models across teams, often using overlapping data. Without shared feature infrastructure, chaos emerges quietly.

Feature stores provide:

- Reuse instead of reinvention

- Consistency instead of drift

- Governance instead of guesswork

In 2026, they are no longer optional for serious production systems.

The Real Problems Feature Stores Solve (Beyond Theory)

Feature Duplication Across Teams

Without a feature store, each team builds its own version of “user activity,” “account risk,” or “engagement score.” These versions slowly diverge.

Feature stores encourage teams to:

- Share feature definitions

- Reuse validated logic

- Build once, use everywhere

This reduces cost and improves reliability.
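
One way to picture "build once, use everywhere" is a shared registry that refuses a second definition under an existing name. The sketch below is an assumption-heavy illustration: `FeatureRegistry`, the team names, and the `engagement_score` formula are invented for this example and do not reflect any specific feature store product.

```python
from typing import Callable


class FeatureRegistry:
    """Hypothetical in-memory registry: one definition per feature name."""

    def __init__(self) -> None:
        self._features: dict[str, tuple[str, Callable[[dict], float]]] = {}

    def register(self, name: str, owner: str):
        def decorator(fn: Callable[[dict], float]):
            if name in self._features:
                existing_owner, _ = self._features[name]
                raise ValueError(
                    f"feature '{name}' already defined by {existing_owner}; reuse it instead"
                )
            self._features[name] = (owner, fn)
            return fn
        return decorator

    def compute(self, name: str, raw: dict) -> float:
        _, fn = self._features[name]
        return fn(raw)


registry = FeatureRegistry()


@registry.register("engagement_score", owner="growth-team")
def engagement_score(raw: dict) -> float:
    # Illustrative formula: share of the last 30 days with a session, capped at 1.0.
    return min(raw["sessions_last_30d"] / 30, 1.0)


# A second team trying to define its own variant gets pointed at the existing one.
try:
    @registry.register("engagement_score", owner="ads-team")
    def engagement_score_v2(raw: dict) -> float:
        return 0.0
except ValueError as err:
    duplicate_error = str(err)

print(registry.compute("engagement_score", {"sessions_last_30d": 15}))  # 0.5
print(duplicate_error)
```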

Slower Experimentation Than Expected

Ironically, teams without feature stores often move slower, not faster.

Every new experiment requires:

- Rebuilding pipelines

- Revalidating logic

- Debugging inconsistencies

With a feature store, experimentation becomes incremental. Teams focus on modeling — not plumbing.

Debugging That Feels Impossible

When a model behaves oddly in production, teams often can’t answer basic questions:

- What data did it see?

- Which features mattered?

- How did this input differ from training?

Feature stores preserve feature lineage, making post-incident analysis realistic instead of speculative.
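
As a rough sketch of what lineage can look like in practice, the Python below records the feature values and feature versions served with each prediction, then compares them with training-time averages. `LineageRecord`, `LineageLog`, and the sample numbers are hypothetical; real systems would persist this in durable storage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LineageRecord:
    request_id: str
    model_version: str
    features: dict[str, float]
    feature_versions: dict[str, str]


class LineageLog:
    """Hypothetical in-memory log of what each prediction actually saw."""

    def __init__(self) -> None:
        self._records: dict[str, LineageRecord] = {}

    def record(self, rec: LineageRecord) -> None:
        self._records[rec.request_id] = rec

    def get(self, request_id: str) -> LineageRecord:
        return self._records[request_id]

    def diff_from_training(
        self, request_id: str, training_means: dict[str, float]
    ) -> dict[str, float]:
        """Served value minus training mean, for each feature with a known mean."""
        rec = self._records[request_id]
        return {
            name: value - training_means[name]
            for name, value in rec.features.items()
            if name in training_means
        }


log = LineageLog()
log.record(
    LineageRecord(
        request_id="req-001",
        model_version="fraud-model:12",
        features={"txn_count_24h": 12.0, "refund_ratio": 0.5},
        feature_versions={"txn_count_24h": "v3", "refund_ratio": "v1"},
    )
)

training_means = {"txn_count_24h": 3.0, "refund_ratio": 0.25}
print(log.get("req-001").feature_versions)
print(log.diff_from_training("req-001", training_means))
```

With a record like this, "What data did it see?" and "How did this input differ from training?" become lookups rather than guesses.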

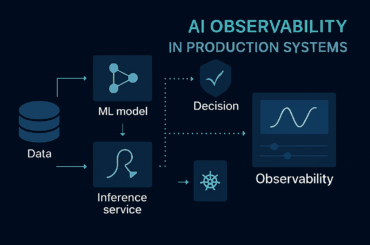

One of the reasons feature-related issues are so difficult to diagnose is that they rarely fail loudly. Models continue running, predictions keep flowing, and infrastructure metrics stay green — even as behavior quietly drifts. This is where teams begin to realize that feature consistency alone isn’t enough; they also need visibility into how models behave once deployed. In practice, feature stores and AI observability evolve together, forming the backbone of reliable production AI systems.

Online vs Offline Feature Stores: Why One Is Not Enough

A mature feature store architecture usually includes two complementary layers.

Offline feature stores handle:

- Historical data

- Large-scale batch processing

- Model training and backtesting

Online feature stores handle:

- Real-time inference

- Low-latency lookups

- Production requests

The key insight is that both layers share the same feature definitions. This shared logic is what prevents skew and inconsistency.

Teams that try to operate with only one layer eventually hit performance or reliability limits.
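
The two layers can be sketched in a few lines of Python. This is a toy model under stated assumptions: `OfflineStore`, `OnlineStore`, the `DEFINITIONS` table, and the event shape are hypothetical, and real stores add storage engines, freshness handling, and much more. What it does show is the shared definition: the offline layer answers "what was the value as of time `ts`", the online layer serves the latest precomputed value, and both call the same function.

```python
from typing import Callable

FeatureFn = Callable[[list[dict]], float]


def purchase_count(events: list[dict]) -> float:
    """Shared definition: number of purchase events."""
    return float(sum(1 for e in events if e["type"] == "purchase"))


DEFINITIONS: dict[str, FeatureFn] = {"purchase_count": purchase_count}


class OfflineStore:
    """Keeps full history; computes values as of a past timestamp for training."""

    def __init__(self, events: list[dict]) -> None:
        self.events = events

    def get_as_of(self, name: str, user_id: str, ts: int) -> float:
        visible = [
            e for e in self.events if e["user_id"] == user_id and e["ts"] <= ts
        ]
        return DEFINITIONS[name](visible)


class OnlineStore:
    """Keeps only the latest precomputed value per (feature, user) for fast lookup."""

    def __init__(self) -> None:
        self.latest: dict[tuple[str, str], float] = {}

    def materialize(self, name: str, user_id: str, events: list[dict]) -> None:
        user_events = [e for e in events if e["user_id"] == user_id]
        self.latest[(name, user_id)] = DEFINITIONS[name](user_events)

    def get(self, name: str, user_id: str) -> float:
        return self.latest[(name, user_id)]


events = [
    {"user_id": "u1", "type": "purchase", "ts": 1},
    {"user_id": "u1", "type": "view", "ts": 2},
    {"user_id": "u1", "type": "purchase", "ts": 3},
    {"user_id": "u2", "type": "purchase", "ts": 2},
]

offline = OfflineStore(events)
online = OnlineStore()
online.materialize("purchase_count", "u1", events)

print(offline.get_as_of("purchase_count", "u1", ts=2))  # 1.0, history as of ts=2
print(online.get("purchase_count", "u1"))               # 2.0, latest value
```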

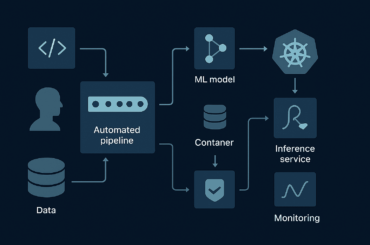

Feature Stores in Kubernetes-Based Production Environments

In 2026, many production AI systems run on Kubernetes. Feature stores have adapted accordingly.

Modern deployments treat feature stores as:

- First-class services

- Versioned and observable components

- Integrated with CI/CD pipelines

Kubernetes enables:

- Independent scaling of feature services

- Isolation across teams and workloads

- Better fault tolerance

However, Kubernetes alone does not solve feature consistency. It simply provides the execution environment. The discipline still comes from the feature store itself.

Feature Stores and Generative AI: An Overlooked Connection

Generative AI systems often appear less structured than traditional ML, but they still rely heavily on features.

Examples include:

- User context for personalization

- Retrieval metadata for RAG systems

- Conversation embeddings

- Behavioral signals over time

Without a feature store, these inputs are scattered across services and databases. This fragmentation makes generative systems harder to debug, harder to govern, and harder to trust.

Feature stores bring order to this complexity by treating context as shared infrastructure rather than ad-hoc state.
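
As a small illustration of treating context as shared infrastructure, the sketch below assembles prompt context from one named list of features instead of ad-hoc lookups spread across services. `ContextStore`, `CONTEXT_FEATURES`, and the feature names are assumptions made up for this example, not part of any particular framework.

```python
from typing import Any, Optional


class ContextStore:
    """Hypothetical key-value view of per-user context features."""

    def __init__(self) -> None:
        self._values: dict[tuple[str, str], Any] = {}

    def put(self, entity_id: str, name: str, value: Any) -> None:
        self._values[(entity_id, name)] = value

    def get_many(self, entity_id: str, names: list[str]) -> dict[str, Optional[Any]]:
        # Missing features come back as None rather than raising.
        return {n: self._values.get((entity_id, n)) for n in names}


# One declared list of context inputs, shared by every caller that builds a prompt.
CONTEXT_FEATURES = ["preferred_language", "plan_tier", "recent_topics"]


def build_prompt(store: ContextStore, user_id: str, question: str) -> str:
    ctx = store.get_many(user_id, CONTEXT_FEATURES)
    lines = [f"{name}: {value}" for name, value in ctx.items() if value is not None]
    return "User context:\n" + "\n".join(lines) + f"\n\nQuestion: {question}"


store = ContextStore()
store.put("u1", "preferred_language", "en")
store.put("u1", "plan_tier", "pro")

print(build_prompt(store, "u1", "How do I export data?"))
```

Because the inputs are declared in one place, the same list can be logged, reviewed, and versioned like any other feature set.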

As generative models move from experimentation to production, the same foundational challenges emerge: reproducibility, governance, and trust. These mirror the broader patterns seen as generative AI systems scale beyond demos into real-world applications.

Governance, Explainability, and Trust

As AI systems increasingly influence decisions that affect users, governance is no longer optional.

Feature stores support governance by enabling:

- Feature-level audit trails

- Reproducible training runs

- Clear ownership of feature definitions

- Faster compliance reviews

When regulators or internal auditors ask “Why did the model behave this way?”, feature stores help teams answer with evidence instead of assumptions.
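
One simple way to make a training run reproducible at the feature level is to store a fingerprint of the exact feature definitions it used. The sketch below is an assumption, not a prescribed method: the definition fields (`name`, `version`, `owner`), the model name, and the `feature_set_fingerprint` helper are hypothetical. It uses only the Python standard library.

```python
import hashlib
import json


def feature_set_fingerprint(definitions: list[dict]) -> str:
    """Return a stable SHA-256 fingerprint for a set of feature definitions."""
    # Sort by name and serialize with sorted keys so ordering never changes the hash.
    canonical = json.dumps(
        sorted(definitions, key=lambda d: d["name"]),
        sort_keys=True,
        separators=(",", ":"),
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


definitions = [
    {"name": "account_risk", "version": "3", "owner": "fraud-team"},
    {"name": "user_activity", "version": "7", "owner": "growth-team"},
]

# Audit record a training job might store next to the model artifact.
training_run = {
    "model": "churn-model",
    "feature_fingerprint": feature_set_fingerprint(definitions),
}

reordered = list(reversed(definitions))
bumped = [dict(definitions[0], version="4"), definitions[1]]

# Same definitions in a different order: fingerprint matches.
print(training_run["feature_fingerprint"] == feature_set_fingerprint(reordered))  # True
# One feature version changed: fingerprint differs.
print(training_run["feature_fingerprint"] == feature_set_fingerprint(bumped))     # False
```

An auditor can then check whether the features in production today match the ones recorded for a given training run by comparing two strings.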

When You Actually Need a Feature Store (And When You Don’t)

Not every AI project needs a feature store on day one.

You likely do need one if:

- Multiple models share similar inputs

- Training and inference pipelines differ

- Models degrade unexpectedly in production

- Teams struggle with reproducibility or audits

- AI outputs influence real users or revenue

You may not need one yet if:

- You are experimenting offline

- You run a single model with static data

- Production impact is minimal

Teams more often err by waiting too long than by adopting too early.

What Feature Store Maturity Looks Like

Low-maturity systems:

- Hard-coded feature logic

- Manual pipelines

- Reactive debugging

- Tribal knowledge

High-maturity systems:

- Centralized feature definitions

- Automated validation

- Observability baked in

- Clear ownership and governance

The difference shows up not in demos, but in operational confidence.
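
"Automated validation" and "clear ownership" can start very small. The following sketch assumes a hypothetical `FeatureSpec` with an allowed range and an owner, plus a `validate` helper that reports missing or out-of-range values before they reach a model. The names and thresholds are illustrative only.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FeatureSpec:
    name: str
    min_value: float
    max_value: float
    owner: str  # team to notify when validation fails


def validate(spec: FeatureSpec, values: list[Optional[float]]) -> list[str]:
    """Return a human-readable problem for each missing or out-of-range value."""
    problems = []
    for i, v in enumerate(values):
        if v is None or (isinstance(v, float) and math.isnan(v)):
            problems.append(f"{spec.name}[{i}]: missing value")
        elif not spec.min_value <= v <= spec.max_value:
            problems.append(
                f"{spec.name}[{i}]: {v} outside [{spec.min_value}, {spec.max_value}]"
            )
    return problems


spec = FeatureSpec(
    name="engagement_score", min_value=0.0, max_value=1.0, owner="growth-team"
)
problems = validate(spec, [0.2, None, 1.5, float("nan")])

for p in problems:
    print(f"{p} (owner: {spec.owner})")
```

Run as part of a pipeline, a check like this turns reactive debugging into a failed build with a named owner.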

Final Thoughts: Reliable AI Starts Before the Model

Feature stores don’t make AI more impressive on the surface. They make it predictable, explainable, and scalable.

In 2026, teams that invest in feature infrastructure ship AI faster — not slower — because they spend less time fixing invisible problems.

If models are the brain of AI systems, feature stores are the nervous system. Without them, everything looks fine… until it doesn’t.

FAQs

Why are feature stores important?

Feature stores are important because they eliminate training–serving skew, improve feature reuse across teams, and provide governance and traceability for production machine learning systems.

How is a feature store different from a database?

Databases store raw data, while feature stores manage derived features with defined logic, versioning, and consistency guarantees across training and inference workflows.

What is training–serving skew?

Training–serving skew occurs when features used during model training differ from those used during production inference, leading to degraded performance and unpredictable behavior.

Does every AI system need a feature store?

Not all systems need a feature store. Feature stores are most valuable when multiple models share features, inference is real-time, or production reliability and governance are critical.

Can feature stores run on Kubernetes?

Yes. Feature stores commonly run in Kubernetes environments and integrate with CI/CD pipelines, scalable inference services, and cloud-native observability tools.