When “It Deployed Successfully” Stops Meaning Anything

In traditional software, deployment is a milestone.

In AI systems, deployment is a risk event.

Models don’t fail loudly. They degrade quietly. Accuracy slips, bias creeps in, latency spikes, and decisions drift away from reality — often without triggering alerts. By the time someone notices, the damage is already done.

This is why AI observability has become one of the most critical production concerns in 2026. It is no longer enough to monitor infrastructure or application health. Teams must understand how models behave in the real world, continuously and contextually.

What AI Observability Really Means (Beyond Logging and Metrics)

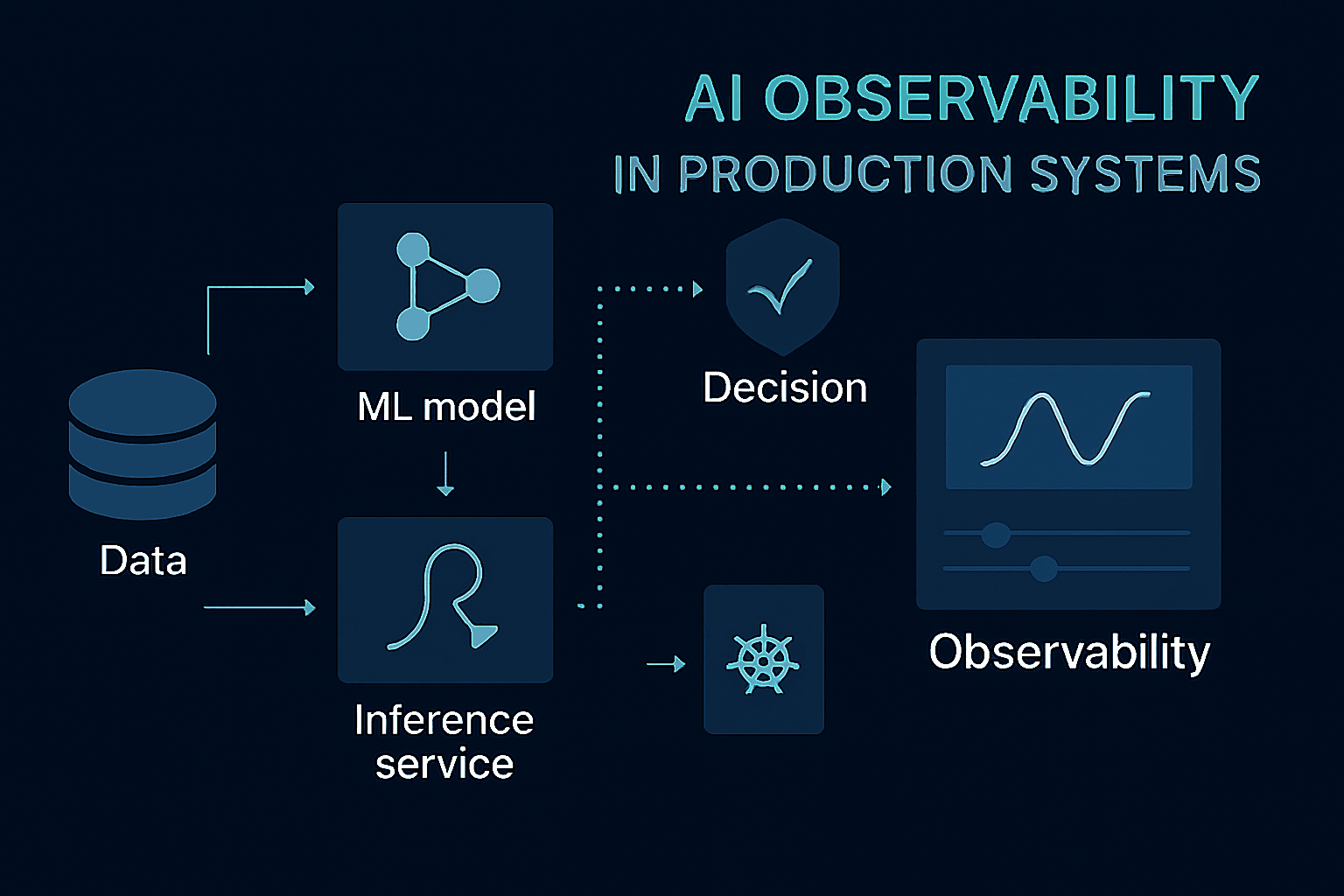

AI observability is the ability to:

- Understand what a model is doing

- Explain why it made a decision

- Detect when behavior changes

- Act before users are affected

Unlike traditional observability, AI observability spans multiple layers:

- Data quality

- Model performance

- System behavior

- Business impact

The challenge is that failures often occur between these layers, not within a single one.

Why Traditional Monitoring Fails for AI Systems

Most organizations start by extending existing observability tools to AI workloads. This approach quickly breaks down.

Infrastructure metrics may show healthy pods while predictions become unreliable. Application logs may look clean while data distributions drift silently. Aggregate accuracy may appear stable while errors pile up in edge cases.

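The core issue is that AI systems are probabilistic, not deterministic: a model can answer every request quickly and in a valid format while being wrong for a growing share of them. Observability must reflect that reality.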

The Four Pillars of AI Observability in 2026

1. Data Observability: Watching the Input, Not Just the Output

Data drift is one of the most common causes of model failure. Changes in user behavior, upstream systems, or data pipelines can invalidate assumptions the model was trained on.

Modern teams monitor:

- Feature distributions

- Missing or malformed inputs

- Schema changes

- Sudden shifts in data volume or patterns

The goal is early warning — not postmortem analysis.
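As a rough illustration of input monitoring, the sketch below compares a live feature against its training-time distribution using the Population Stability Index (PSI), one common drift statistic among several (a Kolmogorov-Smirnov test is another). It also tracks the missing-value rate, which catches malformed inputs as well as a column that disappears after a schema change. The function names, the 10-bin quantile scheme, the 5% missing-rate limit, and the 0.2 PSI threshold are assumptions for illustration; 0.2 is a widely used rule of thumb, not a standard.

```python
import math
from bisect import bisect_right

PSI_ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb cut-off, not a universal standard

def quantile_edges(baseline, n_bins=10):
    """Interior bin edges (n_bins - 1 of them) taken from baseline quantiles."""
    ordered = sorted(baseline)
    return [ordered[len(ordered) * i // n_bins] for i in range(1, n_bins)]

def bin_fractions(values, edges, eps=1e-6):
    """Share of values in each bin; eps keeps empty bins from producing log(0)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]

def psi(baseline, live, n_bins=10):
    """Population Stability Index between training-time and live values of one feature."""
    edges = quantile_edges(baseline, n_bins)
    expected = bin_fractions(baseline, edges)
    actual = bin_fractions(live, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))

def missing_rate(rows, feature):
    """Share of input rows where `feature` is absent or None."""
    return sum(1 for r in rows if r.get(feature) is None) / len(rows)

def drift_alerts(baseline_cols, live_rows, max_missing=0.05):
    """Return alert messages for features that went missing or drifted."""
    alerts = []
    for name, base_values in baseline_cols.items():
        present = [r[name] for r in live_rows if r.get(name) is not None]
        if missing_rate(live_rows, name) > max_missing:
            alerts.append(f"{name}: missing rate above {max_missing:.0%}")
        elif present and psi(base_values, present) > PSI_ALERT_THRESHOLD:
            alerts.append(f"{name}: PSI above {PSI_ALERT_THRESHOLD}")
    return alerts
```

In practice `baseline_cols` would hold a sample of each feature from the training set, and `drift_alerts` would run over a sliding window of recent requests, so a warning can fire before any ground-truth labels arrive.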

2. Model Performance Observability: Beyond Accuracy Scores

Offline metrics often fail to reflect production reality.

In 2026, teams track:

- Prediction confidence trends

- Error rates across segments

- Fairness and bias indicators

- Performance decay over time

Crucially, these metrics are evaluated in context, not in isolation. A small accuracy drop in a critical segment may matter more than the global average suggests.
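To show how a global average can hide a failing segment, here is a minimal sketch that computes error rates per segment and flags any segment that regressed beyond its own tolerance. It assumes labeled production records are available as `(segment, prediction, label)` tuples; the function names, segment names, baselines, and tolerances are hypothetical.

```python
from collections import defaultdict

def error_rates(records):
    """records: iterable of (segment, prediction, label) tuples.
    Returns (global error rate, {segment: error rate})."""
    totals, errors = defaultdict(int), defaultdict(int)
    for segment, prediction, label in records:
        totals[segment] += 1
        errors[segment] += int(prediction != label)
    global_rate = sum(errors.values()) / sum(totals.values())
    return global_rate, {s: errors[s] / totals[s] for s in totals}

def regressed_segments(per_segment, baseline, tolerances, default_tolerance=0.05):
    """Segments whose error rate rose more than their tolerance above baseline.

    Critical segments can be given a tighter tolerance; segments with no
    recorded baseline are compared against an error rate of 0.0.
    """
    flagged = []
    for segment, rate in sorted(per_segment.items()):
        allowed = tolerances.get(segment, default_tolerance)
        if rate - baseline.get(segment, 0.0) > allowed:
            flagged.append(segment)
    return flagged
```

With 100 `web` requests at a 5% error rate and 10 `kiosk` requests at 40%, the global error rate is about 8%, yet only the kiosk segment is flagged. Giving critical segments a tighter entry in `tolerances` is one way to encode that they matter more.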

3. System Observability: Latency, Cost, and Reliability

AI systems introduce new operational risks:

- Cold starts

- Resource spikes

- Inference latency under load

- Cost overruns from inefficient models

Observability must connect:

- Model behavior

- Infrastructure utilization

- User experience

Without this connection, teams optimize the wrong layer.
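One way to connect those layers is to aggregate every inference event by model version, so that latency (user experience) and accelerator time (infrastructure and cost) can be read side by side. The sketch assumes each request is recorded with a `model_version`, an end-to-end `latency_ms`, and a metered `gpu_seconds` value, and that cost is estimated from a flat per-GPU-second price; these field names and the pricing model are hypothetical.

```python
import math
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class InferenceEvent:
    model_version: str   # which model served the request
    latency_ms: float    # end-to-end latency seen by the caller
    gpu_seconds: float   # accelerator time consumed (hypothetical metering field)

def percentile(values, q):
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(q * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def summarize(events, price_per_gpu_second):
    """Per model version: request count, p95 latency, estimated cost per 1,000 requests."""
    by_version = defaultdict(list)
    for e in events:
        by_version[e.model_version].append(e)
    summary = {}
    for version, evs in by_version.items():
        cost = sum(e.gpu_seconds for e in evs) * price_per_gpu_second
        summary[version] = {
            "requests": len(evs),
            "p95_latency_ms": percentile([e.latency_ms for e in evs], 95),
            "cost_per_1k": cost / len(evs) * 1000,
        }
    return summary
```

Comparing versions this way shows whether a newer model's gains come with a latency or cost penalty, which neither an infrastructure dashboard nor an accuracy report reveals on its own.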

4. Decision Observability: Understanding Impact, Not Just Predictions

The most advanced teams observe decisions, not just predictions.

They ask:

- What actions did the model trigger?

- How often were predictions overridden?

- What business outcomes followed?

This closes the loop between AI systems and real-world impact.
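Decision observability depends on recording what actually happened after each prediction, not only the prediction itself. The sketch below assumes a hypothetical record that stores the model's recommended action, the final action after any human review, and the business outcome once it becomes known; the lending-style `approve`/`repaid` vocabulary is only an example.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class DecisionRecord:
    prediction_id: str
    model_action: str               # action the model recommended, e.g. "approve"
    final_action: str               # action actually taken after any human review
    outcome: Optional[str] = None   # business outcome once known, e.g. "repaid"

def override_rate(records):
    """Share of decisions where the final action differed from the model's recommendation."""
    return sum(r.model_action != r.final_action for r in records) / len(records)

def outcome_counts(records, action):
    """Tally outcomes for decisions that ended in `action`; unresolved outcomes are skipped."""
    counts = {}
    for r in records:
        if r.final_action == action and r.outcome is not None:
            counts[r.outcome] = counts.get(r.outcome, 0) + 1
    return counts
```

Tracked over time, a rising override rate can be an early sign that people have stopped trusting the model, even before outcome data arrives.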

AI Observability in Kubernetes-Based Production Environments

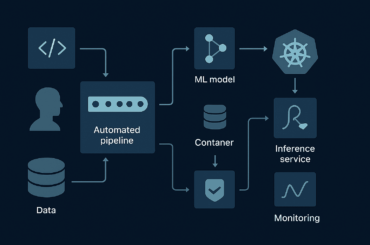

Kubernetes has become a common default runtime for AI inference, which brings both advantages and added complexity.

On the plus side:

- Standardized deployment patterns

- Built-in scaling and isolation

- Integration with existing observability stacks

On the downside:

- Short-lived pods complicate tracing

- Multiple model versions run side by side

- Metrics must be correlated across layers

Successful teams treat Kubernetes as the execution layer, not the observability solution itself.
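Because pods are short-lived and several model versions may serve traffic at once, each telemetry event needs to carry its own identifiers rather than relying on where it was collected. A minimal sketch, assuming the pod name and model version are injected as the environment variables `POD_NAME` and `MODEL_VERSION` (the former can be populated through the Kubernetes Downward API with `fieldRef: metadata.name`). The variable names and log fields are assumptions; the fallback uses the container hostname, which Kubernetes sets to the pod name by default.

```python
import json
import os
import socket
import time
import uuid

# Assumed to be injected into the container; POD_NAME falls back to the hostname.
POD_NAME = os.environ.get("POD_NAME", socket.gethostname())
MODEL_VERSION = os.environ.get("MODEL_VERSION", "unknown")

def log_inference(latency_ms, prediction, trace_id=None):
    """Emit one JSON log line whose identifiers outlive the pod that produced it."""
    event = {
        "ts": time.time(),
        "trace_id": trace_id or uuid.uuid4().hex,  # reuse the caller's trace ID if given
        "pod": POD_NAME,
        "model_version": MODEL_VERSION,
        "latency_ms": latency_ms,
        "prediction": prediction,
    }
    line = json.dumps(event)
    print(line, flush=True)  # stdout is collected by the cluster's log pipeline
    return line
```

A log pipeline or tracing backend can then join these lines with infrastructure metrics on `pod` and `trace_id`, even after the pod itself is gone.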

How AI Observability Fits into CI/CD and MLOps Workflows

In mature environments, observability is not an afterthought — it is part of the delivery pipeline.

Common patterns include:

- Blocking model promotion if observability hooks are missing

- Comparing live metrics against pre-deployment baselines

- Automatically triggering rollback or shadow deployments

- Feeding observability insights back into retraining workflows

This turns observability into a control mechanism, not just a dashboard.
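The gating patterns listed above can be sketched as a single decision function that a pipeline step might call after a canary or shadow period. It assumes baseline and live metrics arrive as plain dictionaries and that the serving layer reports which observability hooks it emits; the hook names, metric names, thresholds, and the three-way `block`/`rollback`/`promote` outcome are illustrative assumptions, not any specific tool's API.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Guardrail:
    metric: str
    max_regression: float        # largest tolerated change in the "worse" direction
    higher_is_better: bool = True

def gate(baseline, live, guardrails, required_hooks, reported_hooks):
    """Decide what the pipeline should do with a candidate model.

    Returns ("block" | "rollback" | "promote", reasons).
    """
    # Promotion is blocked outright if the model does not emit the required signals.
    missing = sorted(set(required_hooks) - set(reported_hooks))
    if missing:
        return "block", [f"missing observability hook: {h}" for h in missing]
    reasons = []
    for g in guardrails:
        if g.metric not in live:
            # Absent live data counts as a failure, not a pass.
            reasons.append(f"{g.metric}: no live data")
            continue
        delta = live[g.metric] - baseline[g.metric]
        regression = -delta if g.higher_is_better else delta
        if regression > g.max_regression:
            reasons.append(f"{g.metric}: worse than baseline by {regression:.3f}")
    return ("rollback" if reasons else "promote"), reasons
```

Treating missing live data as a failure is a deliberate choice here: a gate that passes when telemetry is absent would quietly defeat the purpose of the check.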

Real-World Failure Patterns Observability Helps Prevent

- Silent Degradation: Models appear stable but gradually lose relevance as user behavior changes.

- Feedback Loops: Predictions influence data collection, reinforcing bias or error.

- Overconfidence at the Edges: Global accuracy hides failures in minority or high-risk segments.

- Cost Blindness: Inference costs rise unnoticed until budgets are exceeded.

Observability doesn’t eliminate these risks — it makes them visible early.

Governance, Trust, and the Human-in-the-Loop

As AI systems become more autonomous, trust depends on transparency.

In 2026, responsible teams:

- Maintain audit trails for predictions

- Enable human review for high-impact decisions

- Document model limitations

- Make observability accessible beyond ML teams

AI observability is as much about organizational trust as technical insight.
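An audit trail can start as one structured entry per prediction. The minimal sketch below records the model version, a hash of the canonicalized inputs, the prediction, and whether the case should be routed to a human reviewer. The field names, the 0.7 confidence threshold, the routing rule, and the choice to hash rather than store raw inputs are assumptions for illustration.

```python
import hashlib
import json
from datetime import datetime, timezone

def audit_record(model_version, features, prediction, confidence,
                 high_impact=False, review_threshold=0.7):
    """Build one audit-trail entry for a single prediction."""
    # Sorted keys make the hash independent of feature ordering.
    canonical = json.dumps(features, sort_keys=True, separators=(",", ":"))
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        # A hash ties the entry to its exact inputs without copying raw,
        # possibly sensitive values into a log read beyond the ML team.
        "input_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "prediction": prediction,
        "confidence": confidence,
        # Hypothetical routing rule: high-impact or low-confidence cases go to a human.
        "needs_human_review": high_impact or confidence < review_threshold,
    }

def append_audit(path, record):
    """Append the entry as one JSON line (append mode leaves earlier lines untouched)."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
```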

What AI Observability Maturity Looks Like

Low maturity:

- Basic logs

- Offline accuracy checks

- Reactive debugging

High maturity:

- Continuous monitoring across data, models, and outcomes

- Proactive alerts tied to business risk

- Integrated with CI/CD and incident response

- Clear ownership and accountability

The difference is not tooling — it’s intent.

Final Thoughts: You Can’t Control What You Can’t See

AI systems fail in subtle ways.

Observability is how teams regain control.

In 2026, the question is no longer whether you need AI observability — it’s whether you’re comfortable operating blind.

Teams that invest early move faster with confidence.

Teams that don’t invest tend to learn only after something breaks.

FAQs

Why is AI observability important?

AI observability is important because machine learning models can degrade without triggering traditional alerts. Without observability, teams may deploy models that appear healthy while producing incorrect, biased, or costly outcomes in real-world usage.

How is AI observability different from traditional monitoring?

Traditional monitoring focuses on infrastructure and application metrics like uptime and latency. AI observability also tracks model-specific signals such as data drift, prediction confidence, accuracy decay, and decision outcomes, which are unique to AI systems.

What problems does AI observability help prevent?

AI observability helps prevent silent model degradation, biased predictions, feedback loops, cost overruns, and delayed incident detection in production AI systems.

What are the core components of AI observability?

The core components of AI observability are data observability, model performance monitoring, system observability, and decision or business impact tracking.