The landscape of Artificial Intelligence (AI) is evolving at an unprecedented pace. From foundational models to multimodal assistants, the convergence of deep learning, natural language understanding, and generative capabilities is redefining how enterprises build, scale, and personalize digital experiences.

As someone with a background in Computer Science, a Master’s in Data Science and Machine Learning, and Product Management credentials from Stanford, I’ve spent the last decade at the intersection of AI research and product innovation—leading initiatives that span Conversational AI, LLM optimization, multimodal systems, and responsible AI governance.

LLMs: From Intent Recognition to Conversational Orchestration

Large Language Models (LLMs) like GPT-4, Claude, and Gemini have moved far beyond simple intent recognition. They now orchestrate entire conversations, summarize documents, generate code, simulate empathy, and even serve as copilots in decision-making processes. The leap from rule-based systems to transformer-based architectures has enabled these models to understand context, nuance, and user intent with remarkable precision.

At Adobe, we leveraged LLMs to transform our support experience. Instead of routing users through static decision trees, our virtual agents dynamically synthesized knowledge from product manuals, help articles, and user forums. This shift from retrieval-based to generative support created a more fluid and intuitive user experience.

Example:

In one deployment, a user asked, “Why is my Photoshop crashing when I use neural filters?” Instead of linking to five different articles, the AI agent summarized the root cause (GPU compatibility), suggested a fix (driver update), and offered a direct download link—all in one response. This reduced resolution time by 40% and boosted CSAT scores by 18%.

Beyond support, LLMs are now being used in marketing copy generation, code refactoring, legal document summarization, and even mental health applications. Their ability to adapt tone, style, and domain-specific language makes them versatile tools across industries.

Multimodal AI: Seeing, Hearing, and Understanding

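Multimodal models work across text, images, audio, and video, though coverage varies by model: OpenAI’s GPT-4V accepts text and images, Google’s Gemini 1.5 accepts text, images, audio, and video, and Meta’s ImageBind learns a shared embedding space across six modalities, including images, text, and audio. This unlocks use cases in healthcare (radiology reports), retail (visual search), education (interactive tutoring), and creative industries.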

The fusion of modalities allows AI systems to interpret complex inputs and generate contextually rich outputs. For example, a model can analyze a medical image, correlate it with patient history, and generate a diagnostic summary. In retail, users can upload a photo of a product and ask questions about its availability, price, or alternatives.

Example:

We built a prototype assistant for creative professionals that could interpret a sketch, understand spoken feedback, and generate design suggestions in real time. A designer could say, “Make this logo more minimalist,” and the assistant would adjust the vector file accordingly—blending visual and linguistic inputs seamlessly.

This kind of multimodal interaction is especially powerful in accessibility contexts. For users with visual impairments, voice and tactile feedback can be combined with image descriptions. For neurodiverse users, multimodal cues can enhance comprehension and engagement.

Multilingual AI: Scaling Across Cultures

Global enterprises face the challenge of serving users in dozens of languages. LLMs trained on multilingual corpora such as mC4 and CC100 help, but cultural nuance is key. Language is not just syntax; it is also context, tone, and social norms.

Fine-tuning models for regional dialects, idiomatic expressions, and cultural expectations is essential. This goes beyond translation—it’s about localization. A truly multilingual assistant understands not just what is said, but how it’s meant.

Example:

In our Japanese deployment, we fine-tuned the assistant to handle honorifics and indirect phrasing. A user saying “I wonder if this feature could be improved” was interpreted as a request, not a passive comment. In contrast, our German assistant was optimized for directness and clarity. These adaptations increased engagement and reduced abandonment rates.

Multilingual AI also plays a role in inclusivity. By supporting indigenous languages, regional dialects, and minority scripts, enterprises can reach underserved populations and foster digital equity.

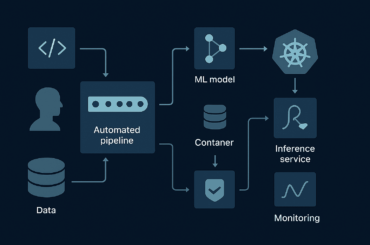

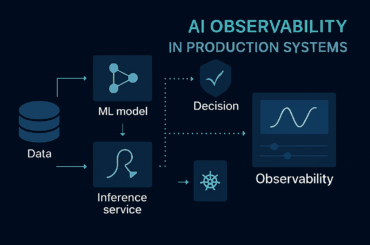

RAG: Grounding Generative AI in Truth

Retrieval-Augmented Generation (RAG) combines semantic search with generative models to produce factually grounded responses. This is critical in domains like legal, finance, and healthcare, where hallucinations can have serious consequences.

RAG systems first retrieve relevant documents from a knowledge base, then pass them to a generative model that synthesizes a response from the retrieved text. Because the answer is anchored to specific documents, outputs are more likely to be accurate, and each claim can be traced back to its source.
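The retrieve-then-generate flow can be sketched in a few lines. This is a minimal illustration, not a production design: the `KNOWLEDGE_BASE` contents and the function names are hypothetical, and a simple bag-of-words cosine similarity stands in for the semantic (embedding-based) search a real system would use. The final call to a generative model is left out; the sketch stops at the grounded prompt that would be sent to it.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base; a real deployment would use a vector store.
KNOWLEDGE_BASE = {
    "gpu-drivers": "Neural filters may crash when the GPU driver is outdated. Update the GPU driver.",
    "refund-policy": "Refunds are processed within five business days of approval.",
    "export-formats": "Files can be exported as PNG, JPEG, or SVG.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, k=2):
    """Return up to k document IDs ranked by similarity, dropping zero-score matches."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), doc_id) for doc_id, text in KNOWLEDGE_BASE.items()]
    scored.sort(reverse=True)
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_prompt(query, doc_ids):
    """Assemble a prompt that restricts the model to the retrieved, ID-tagged sources."""
    sources = "\n".join(f"[{d}] {KNOWLEDGE_BASE[d]}" for d in doc_ids)
    return (
        "Answer using only the sources below and cite their IDs.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )
```

Tagging each source with an ID in the prompt is what makes the answer traceable: the model can be asked to cite those IDs, and the application can link them back to the original documents.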

Example:

In a legal tech pilot, we used RAG to answer questions like “What are the GDPR implications of storing biometric data?” The system retrieved relevant clauses from EU legislation, then generated a summary tailored to the user’s context. This reduced research time from hours to seconds.

RAG also supports explainability. Users can trace the origin of a response, view source documents, and assess credibility. This transparency builds trust and facilitates compliance.

Hyperparameter Tuning and Reinforcement Learning

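Optimizing LLMs for enterprise use involves more than supervised fine-tuning. Preference-based methods help align outputs with user expectations: RLHF (Reinforcement Learning from Human Feedback) trains a reward model on human preference data and then optimizes the LLM against it, typically with PPO (Proximal Policy Optimization), while DPO (Direct Preference Optimization) learns from the same kind of preference pairs directly, without a separate reward model or reinforcement learning loop.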

These methods allow models to learn from human preferences, ethical guidelines, and domain-specific constraints. RLHF, for instance, enables models to prioritize empathy, clarity, or brevity based on feedback loops.
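To make the idea of learning from preferences concrete, here is a small sketch of the DPO objective for a single preference pair. It assumes you already have the summed log-probabilities of the preferred ("chosen") and dispreferred ("rejected") responses under both the model being trained and a frozen reference model. The function name, argument names, and the default `beta` are illustrative choices, not values from any deployment described here.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) pair of responses.

    Each argument is the total log-probability of a response under the
    trained policy or the frozen reference model.
    """
    # How much more the policy favors each response than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    x = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)), written in a numerically stable form.
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))
```

The loss shrinks as the policy raises the likelihood of the preferred response relative to the reference model and lowers that of the rejected one. In practice this is averaged over a batch of preference pairs and minimized with gradient descent.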

Example:

In an insurance chatbot, we used RLHF to train the model to prioritize empathy and clarity. When a user reported a car accident, the assistant responded with “I’m glad you’re safe. Let’s get your claim started,” instead of a generic form link. This human-centric tone improved retention and trust.

Hyperparameter tuning also affects latency, token efficiency, and cost. By tuning training hyperparameters such as batch size and learning rate, and by choosing efficient attention implementations, we can deploy models that are both performant and economical.

Omnichannel AI Assistants: The Next Frontier

Tomorrow’s assistants will operate across chat, voice, email, and even AR/VR. They’ll remember user preferences (with consent), adapt to device constraints, and deliver proactive support. This continuity across channels creates a unified brand experience.

Omnichannel AI is not just about presence—it’s about coherence. A user should be able to start a conversation on mobile, continue it via smart speaker, and receive follow-up via email without losing context.
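One way to picture this coherence is a conversation store keyed by user rather than by channel, so that every channel reads and writes the same history. The sketch below is a simplified, in-memory illustration; the `ContextStore` class and its method names are hypothetical, and a real system would add persistence, consent checks, and authentication.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    user_id: str
    # Each turn is a (channel, speaker, text) tuple.
    turns: list = field(default_factory=list)


class ContextStore:
    """Keeps one conversation per user, shared across all channels."""

    def __init__(self):
        self._by_user = {}

    def append(self, user_id, channel, speaker, text):
        """Record a turn, creating the user's conversation on first contact."""
        convo = self._by_user.setdefault(user_id, Conversation(user_id))
        convo.turns.append((channel, speaker, text))
        return convo

    def history(self, user_id):
        """Return a copy of all turns for the user, regardless of channel."""
        convo = self._by_user.get(user_id)
        return list(convo.turns) if convo else []

    def channels(self, user_id):
        """List the channels the user has used, in order of first appearance."""
        seen = []
        for channel, _, _ in self.history(user_id):
            if channel not in seen:
                seen.append(channel)
        return seen
```

Because the history is looked up by `user_id`, a turn recorded on mobile is visible when the same user speaks to a smart speaker, which is the property the paragraph above describes.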

Example:

Imagine a user asking a smart speaker, “What’s the status of my refund?” The assistant accesses CRM data, confirms the refund, and sends a follow-up email with the transaction ID. This seamless experience boosts satisfaction and reduces friction.

In AR/VR environments, assistants can guide users through immersive tutorials, product demos, or virtual consultations. This opens new avenues for training, shopping, and collaboration.

Open-Source vs. Commercial AI: Strategic Trade-offs

Open-source frameworks like Hugging Face Transformers, LangChain, and Rasa offer flexibility, transparency, and community support. Commercial platforms like Azure OpenAI, Google Vertex AI, and Anthropic’s Claude provide scalability, compliance, and enterprise-grade tooling.

Choosing between them depends on factors like data sensitivity, latency requirements, and customization needs. Open-source is ideal for rapid prototyping and academic research. Commercial platforms excel in production-grade deployments.

Example:

We prototyped a multilingual assistant using mBART through Hugging Face Transformers, then scaled it using Azure OpenAI for production. This hybrid approach balanced agility with reliability.

Hybrid architectures are becoming common—where open-source models handle edge cases, and commercial APIs manage core workflows. This modularity enables cost control and innovation.
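A hybrid setup usually needs a small routing layer that decides which backend serves each request. The following sketch is one possible shape for that decision. The intent names, the backend labels, and the rule that sensitive data stays on the self-hosted model are all assumptions made for illustration, loosely based on the data-sensitivity factor mentioned earlier.

```python
from dataclasses import dataclass

# Hypothetical set of high-volume intents served by the commercial API.
CORE_INTENTS = {"billing", "order_status", "password_reset"}


@dataclass
class Request:
    intent: str
    contains_sensitive_data: bool = False


def choose_backend(request):
    """Pick a backend label for a request under the assumed routing rules."""
    # Assumption: sensitive data never leaves self-hosted infrastructure.
    if request.contains_sensitive_data:
        return "self_hosted_open_source"
    # Core workflows go to the commercial API.
    if request.intent in CORE_INTENTS:
        return "commercial_api"
    # Everything else is treated as an edge case for the open-source model.
    return "self_hosted_open_source"
```

Keeping the routing rules in one function makes the cost and compliance trade-offs explicit and easy to change as usage patterns shift.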

Responsible AI: Governance by Design

As AI systems become more autonomous, ethical considerations must be embedded from the start. This includes bias detection, fairness audits, explainability, and fallback mechanisms.

Responsible AI is not a feature—it’s a philosophy. It requires cross-functional collaboration between data scientists, ethicists, legal teams, and product managers.

Example:

In a healthcare assistant, we flagged any response involving medication or diagnosis for human review. This ensured compliance with medical regulations and protected user safety. We also implemented audit trails, consent management, and adversarial testing. These safeguards help prevent misuse, ensure accountability, and foster trust.
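A review gate of this kind can be sketched as a check that runs on every draft response before it is released. The version below is deliberately simple and is not the system described above: the keyword patterns, the `gate` and `release` names, and the in-memory `audit_log` are hypothetical, and a real deployment would more likely use a trained classifier and durable audit storage.

```python
import re
from dataclasses import dataclass

# Hypothetical trigger patterns; a production system would likely use a classifier.
SENSITIVE_PATTERNS = {
    "medication": re.compile(r"\b(medication|dosage|dose|prescri\w*)\b", re.IGNORECASE),
    "diagnosis": re.compile(r"\b(diagnos\w*)\b", re.IGNORECASE),
}


@dataclass
class ReviewDecision:
    needs_human_review: bool
    reasons: list


# In-memory audit trail, for illustration only.
audit_log = []


def gate(draft):
    """Flag a draft response if it matches any sensitive pattern."""
    reasons = [label for label, pattern in SENSITIVE_PATTERNS.items() if pattern.search(draft)]
    return ReviewDecision(bool(reasons), reasons)


def release(draft):
    """Log the decision, then return the draft only if no review is needed."""
    decision = gate(draft)
    audit_log.append({
        "draft": draft,
        "flagged": decision.needs_human_review,
        "reasons": decision.reasons,
    })
    return None if decision.needs_human_review else draft
```

Returning `None` for flagged drafts forces the calling code to handle the fallback path explicitly, for example by queueing the draft for a clinician and telling the user that a person will follow up.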

Conclusion: Building the Future Responsibly

The fusion of LLMs, multimodal inputs, and conversational intelligence is transforming how businesses operate. But innovation must be matched with responsibility. My work has shown that success in AI isn’t just about model performance—it’s about trust, transparency, and user-centric design.

As we move into an era of AI-native workflows, the challenge is clear: build systems that are not only smart, but also safe, inclusive, and aligned with human values. The future of AI is not just technical—it’s human. It’s about designing experiences that empower, protect, and inspire. Whether you’re building a chatbot, a virtual tutor, or a creative assistant, the principles remain the same: understand deeply, respond wisely, and evolve responsibly.

FAQs

What are the key AI trends in 2025?

The key AI trends in 2025 include advancements in Large Language Models (LLMs), multimodal AI systems, conversational AI, Retrieval-Augmented Generation (RAG), and responsible AI governance.

What is multimodal AI?

Multimodal AI refers to systems that can process and generate multiple types of data, such as text, images, audio, and video, allowing for richer, more interactive experiences.

What does responsible AI involve?

Responsible AI involves practices like bias mitigation, human review of sensitive content, transparency through audit trails, and aligning AI outputs with ethical and legal standards.

Can LLMs handle multiple languages?

Yes, LLMs trained on multilingual datasets can handle multiple languages. However, localization is key to capturing cultural context, tone, and intent accurately.

How will omnichannel AI assistants work?

Omnichannel AI assistants will operate across chat, voice, email, and AR/VR, maintaining context and delivering consistent, personalized support across all user touchpoints.